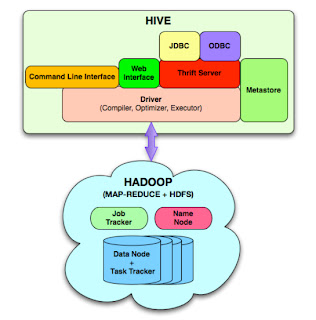

Hive is an abstraction layer on top of MapReduce that lets users query data in a Hadoop cluster without knowing Java or MapReduce. It uses the HiveQL language, which is very similar to SQL.
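To show roughly what Hive hides from the user, the Python sketch below hand-codes the map, shuffle and reduce steps that a query such as `SELECT word, COUNT(*) FROM words GROUP BY word` conceptually turns into. It is a simplified, in-process illustration under that assumption, not Hive's actual execution plan. The function names and the `words` table are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(rows: Iterable[str]) -> Iterator[Tuple[str, int]]:
    # Mapper: emit (key, 1) for every row, keyed on the GROUP BY column.
    for word in rows:
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Framework step between phases: collect all values that share a key.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    # Reducer: sum the 1s per key, which is what COUNT(*) computes.
    return {key: sum(values) for key, values in groups.items()}


def group_by_count(rows: Iterable[str]) -> Dict[str, int]:
    # Hypothetical stand-in for: SELECT word, COUNT(*) FROM words GROUP BY word
    return reduce_phase(shuffle(map_phase(rows)))


print(group_by_count(["hive", "hadoop", "hive"]))  # {'hive': 2, 'hadoop': 1}
```

With Hive, the user writes only the one-line query; producing and running the equivalent MapReduce job is Hive's responsibility.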

8 points about Hive:

- Hive was originally developed at Facebook

- Provides a very SQL-like language

- Can be used by people who know SQL

- Enabling Hive requires almost no extra work by the system administrator

- Hive ‘layers’ table definitions on top of data in HDFS

- Hive tables are stored in Hive’s ‘warehouse’ directory in HDFS, by default `/user/hive/warehouse`

    - Tables are stored in subdirectories of the warehouse directory

    - Actual data is stored in flat files: control-character-delimited text (Ctrl-A, `\001`, separates fields by default) or SequenceFiles

- SQL-like queries

    - SHOW TABLES, DESCRIBE, DROP TABLE

    - CREATE TABLE, ALTER TABLE

    - SELECT, INSERT

- Not all ‘standard’ SQL is supported

    - No support for UPDATE or DELETE in older releases (Hive 0.14 added both for transactional tables)

    - No support for INSERTing single rows in older releases (Hive 0.14 added `INSERT INTO ... VALUES`)

    - Relatively limited number of built-in functions

    - No datatypes for date or time in early releases; use the STRING datatype instead. Newer releases do support them: TIMESTAMP since Hive 0.8.0 and DATE since Hive 0.12.0.
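The storage points above describe "schema on read": the files in HDFS are plain delimited text, and the table definition is only applied when the data is read. The Python sketch below illustrates that idea under simple assumptions. It computes where a table's directory would sit under the default warehouse path, then applies a column list to Ctrl-A-delimited lines. It is not Hive's SerDe code; `table_location`, `read_rows` and the `events` table are hypothetical. It also shows why storing dates as STRING works for filtering: ISO `yyyy-MM-dd` strings sort in the same order as the dates they represent.

```python
from pathlib import PurePosixPath
from typing import Dict, Iterable, List, Optional

# Default value of the hive.metastore.warehouse.dir setting.
WAREHOUSE_DIR = PurePosixPath("/user/hive/warehouse")
FIELD_DELIM = "\x01"  # Ctrl-A, the default field delimiter for text tables


def table_location(table: str, database: str = "default") -> str:
    # Tables in the default database live directly under the warehouse dir;
    # tables in other databases live under a "<database>.db" subdirectory.
    if database == "default":
        return str(WAREHOUSE_DIR / table)
    return str(WAREHOUSE_DIR / f"{database}.db" / table)


def read_rows(lines: Iterable[str], columns: List[str]) -> List[Dict[str, Optional[str]]]:
    # Apply the table definition at read time: split each line, name the fields.
    rows: List[Dict[str, Optional[str]]] = []
    for line in lines:
        fields: List[Optional[str]] = list(line.rstrip("\n").split(FIELD_DELIM))
        # Missing trailing fields become None (read as NULL); extras are dropped by zip.
        fields += [None] * (len(columns) - len(fields))
        rows.append(dict(zip(columns, fields)))
    return rows


columns = ["user_id", "event_date"]  # hypothetical table, date kept as STRING
data = ["1\x012014-03-02\n", "2\x012013-12-31\n"]
rows = read_rows(data, columns)

# Plain string comparison filters ISO-formatted dates correctly.
recent = [r["user_id"] for r in rows if r["event_date"] >= "2014-01-01"]

print(table_location("events"))          # /user/hive/warehouse/events
print(table_location("events", "logs"))  # /user/hive/warehouse/logs.db/events
print(recent)                            # ['1']
```

The string-date trick only holds when every value uses the same zero-padded `yyyy-MM-dd` layout; a format such as `d/M/yyyy` would not sort correctly as text.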